The following guide is meant to serve as a starting point for those who wish to gain a basic understanding of AI in the context of teaching and learning. Whether you embrace AI tools or limit their use, foundational knowledge can help you make informed decisions about the use of text- and image-generating chatbots in the classroom. Additional resources will be added as new content becomes available.

What is Artificial Intelligence (AI)?

According to EDUCAUSE (2022), artificial intelligence refers to machines that perform tasks typically considered to require human thought and decision-making. Generative AI (GenAI) is a form of AI that uses algorithms trained on large datasets to create text, images, code, audio, and video content, and many GenAI tools are accessed through chatbots. Generative AI tools such as ChatGPT radically challenge our previous notions about content authoring and creation. Although ChatGPT is one of the most frequently discussed GenAI tools in higher education, it is not the only one; other examples include Claude, Gemini, Copilot, and Perplexity AI.

How do AI chatbots work?

As explained by the Yale Poorvu Center for Teaching and Learning, users engage in text-based conversations with AI chatbots by submitting prompts in natural language. Chatbots respond based on patterns learned from large training datasets, and users can add follow-up prompts to refine results and incorporate additional ideas. Because chatbots are trained to predict likely sequences of words, they produce probable answers rather than verified ones. In some instances, the answers are wrong or fabricated; for example, ChatGPT may cite sources that don’t exist. This phenomenon is often referred to as an AI hallucination. In other instances, the answers are accurate and helpful. As we move forward with AI tools, assessing the value of a generated response and properly attributing AI sources are important literacy skills for faculty, staff, and students.

What implications does AI have for Academic Integrity?

When used in the wrong way, AI tools can threaten academic integrity and result in academic dishonesty. As noted on TCNJ’s Academic Integrity webpage, “Teachers, advisors, and classmates must be able to trust that the ideas students express, the data they present, and the work they submit are their own. Misrepresenting another’s work as one’s own prevents an opportunity to learn and violates this trust. The right of ownership to academic work is as important as the right of ownership over personal possessions.”

TCNJ’s Violations of Academic Integrity page includes a note on the use of AI (updated 2024), stating, “There are many ways AI can be used responsibly in an academic context (e.g., to spell and grammar check, suggest brainstorming ideas for a paper topic, generate study guides, etc.). There are also instances of AI use that could be considered in violation of academic policy (e.g., using an AI tool to generate a paper instead of writing the paper yourself). A determination of what is acceptable use will vary from class to class and instructor to instructor. Please check with your instructor to ensure that your use of AI would be in accordance with the goals of your course.”

How can I address AI use in my syllabus?

The syllabus is a logical place to first address AI use and establish boundaries in your class. While it can be challenging to define the scope of GenAI use in a single syllabus statement, it is essential to set a foundation for students, because each class may handle the spectrum of potential AI uses differently. If you have not yet established an AI syllabus statement, or want to revisit and refresh an existing one, the Stanford Center for Teaching and Learning’s Worksheet for Creating Your AI Syllabus Statement offers robust ideas for appropriate language.

If you plan to allow AI tools for certain tasks but not others, clarify which tasks fall into each category. Temple University offers examples of syllabus language for acceptable and unacceptable uses of AI. Encourage students to ask for clarification if they are unsure whether a particular use is acceptable. If you allow AI use, consider reviewing with your students how different style guides handle proper attribution:

- APA: How to cite ChatGPT

- MLA: How do I cite generative AI in MLA style?

- Chicago: Citation, documentation of sources

If you do not feel that GenAI is aligned with your pedagogy, discipline, or course learning goals, there are ways to deter AI use in the classroom. It is critical to be transparent with students about these expectations. Harvard’s page on Academic Integrity and Teaching With(out) AI offers resources for faculty who want to discourage GenAI use in their classes. Similarly, Yale University’s AI Guidance for Teachers offers this simple sentence: “Collaboration with ChatGPT or other AI composition software is not permitted in this course.”

Syllabus Suggestions for Managing Use of GenAI

Once you understand AI tools and have decided what you will and will not permit in your classroom, we encourage you to consider ways AI might support student success and improve learning. For example, AI can serve as a supportive tool for personalized learning and for developing critical thinking skills. For a thoughtful examination of this topic, see MIT’s Teaching + Learning Lab Blog Series, Teaching & Learning with ChatGPT: Opportunity or Quagmire?

AI is affecting many parts of our lives, and guiding students to understand and use AI tools will prepare them to help chart the path forward in their personal and professional futures.

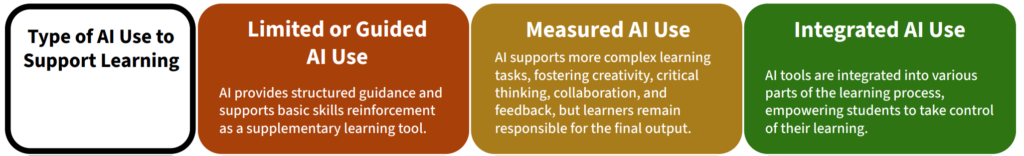

A Spectrum of Student AI Use in the Classroom (PDF) via Jourde, F. (2024), Barnum, B. (2023), and Ouyang (2021)

Declaration of AI Use: In your syllabus or other course materials, such as Canvas, consider establishing a norm around student declarations of AI use. A declaration of AI use is a statement outlining how a student used AI in an assignment. Declarations can be broad, such as a brief overview of the GenAI tools a student used, or more specific, such as answers to a set of questions provided by the instructor. The University of Cambridge School of Humanities and Social Sciences offers an example template for declaring the use of generative AI, which asks students to respond to the following prompts:

- Which permitted use of generative AI are you acknowledging?

- Which generative AI tool did you use (name and version)?

- What prompt did you provide?

- What did you use the tool for?

- How have you used or changed the generative AI’s output?

Generally, GenAI declarations include some combination of the following:

- The specific AI tool or technology;

- How and when the AI tool was used over the course of an assignment;

- The specific prompts entered into GenAI chatbots; and

- An explanation of how the output was used, including which parts are quoted directly and which have been modified.

Students can attach such a declaration to the end of their assignment, add it in Canvas comments, or place it anywhere else the instructor can access it.

Resources

- The College of New Jersey Task Force on Artificial Intelligence White Paper Report (PDF) (Last updated February 5, 2024)

- Artificial Intelligence—EDUCAUSE

- AI Guidance for Teachers: Yale Poorvu Center for Teaching and Learning

- Worksheet for Creating Your AI Syllabus Statement: Stanford Center for Teaching and Learning (PDF)

- Sample Syllabus Statements for the Use of AI Tools in Your Course: Temple University Center for the Advancement of Teaching (PDF)

- Academic Integrity and Teaching With(out) AI: Harvard College Office of Academic Integrity and Student Conduct

- Teaching & Learning with ChatGPT: Opportunity or Quagmire? MIT’s Teaching + Learning Lab Blog Series

- Template declaration of the use of generative artificial intelligence: University of Cambridge School of Humanities and Social Sciences

Additional Reading

- AI and the Future of Teaching and Learning (Department of Education, May 2023)

- Guidelines for Educators (OpenAI)

- 4 Steps to Help You Plan for ChatGPT in Your Classroom (Darby, Chronicle of Higher Education, 2023)

- For Education, ChatGPT Holds Promise—and Creates Problems (EdSurge, 2023)

- Writing in the Age of AI Workshop Clip 3: Teaching Writing with AI in Mind by John Bradley (Vanderbilt Center for Teaching, 2023)

TCNJ Faculty Highlights

Hu, Y. (2024). Generative AI, communication, and stereotypes: Learning critical AI literacy through experience, analysis, and reflection. Communication Teacher, Online First Publication. https://doi.org/10.1080/17404622.2024.2397065

Hu, Y. & Kurylo, A. D. (2024). Screaming out loud in the communication classroom: Asian stereotypes and the fallibility of image generating artificial intelligence (AI). In S. Elmoudden & J. Wrench (Eds.), The Role of Generative AI in the Communication Classroom (pp. 262-283). IGI Global. https://doi.org/10.4018/979-8-3693-0831-8.ch012